Overview

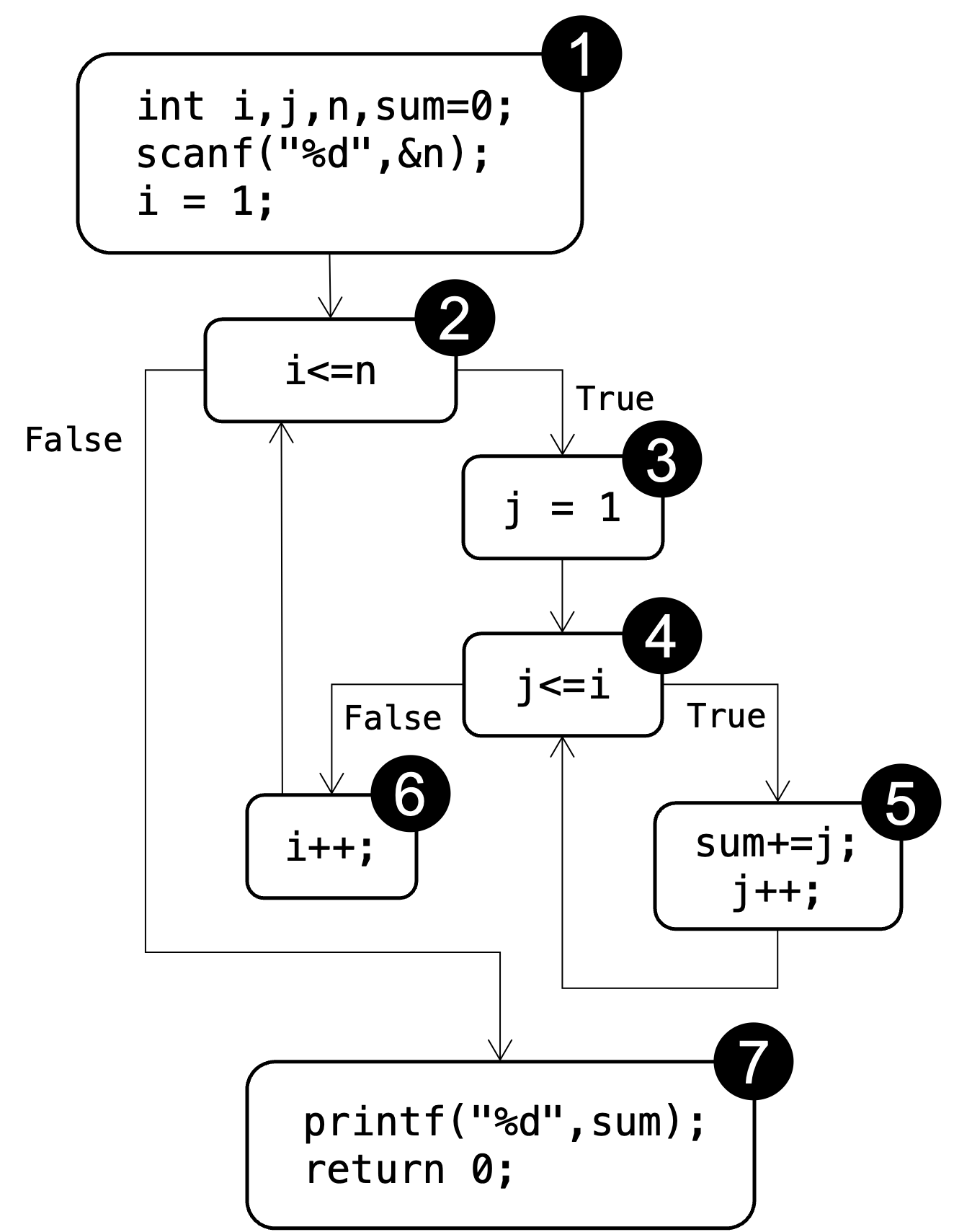

Let's consider a simple programming assignment where students are asked to write a C program that calculates the sum of the first n natural numbers in Figure 1.

The program should take an integer n as input and output the sum of the first n natural numbers.

The student made a mistake in the inner loop condition at line 8.

The correct loop condition should be j<=i instead of j<=N.

In addition to the programs, we also assume to have some tests, which can be used to assess the correctness of the student submission.

In this example, we only have some concrete inputs of interest \(n\in\{2, 4, 10, 3, 1, 20\}\), and the correct behavior can be extracted from the reference implementation.

1 2 3 4 5 6 7 8 9 10 11 12 13 | |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

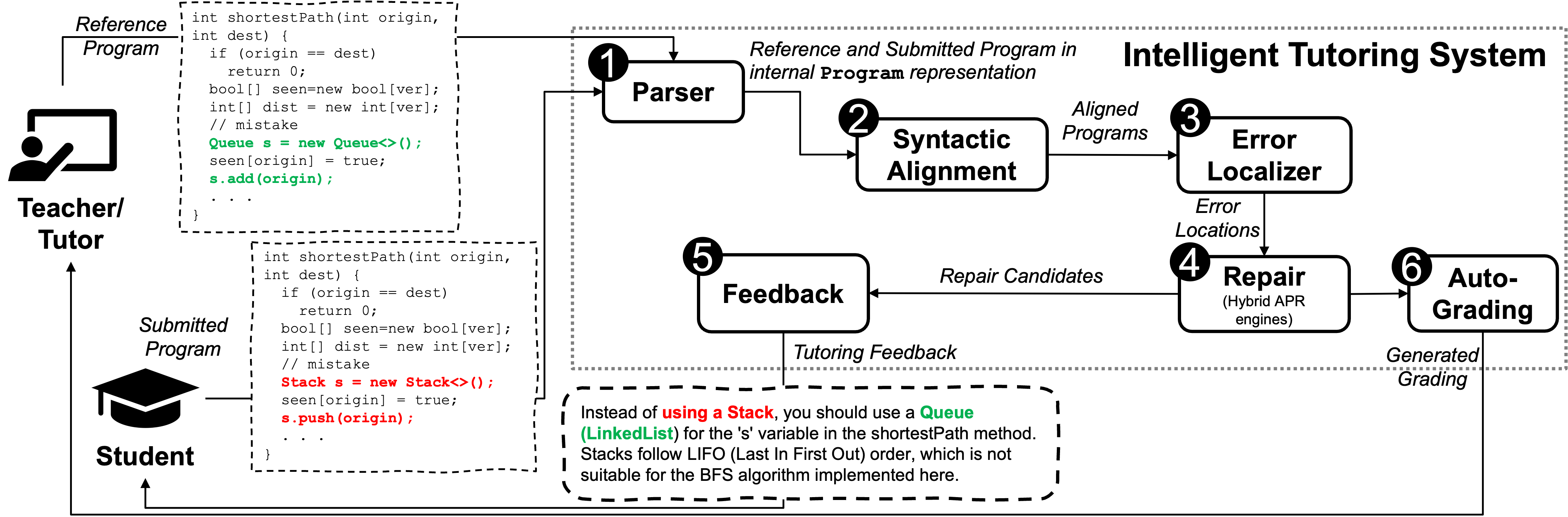

Parser

The reference program and the submitted (incorrect) program are given to the parser component. For each program, it generates the corresponding internal program representation. This representation is based on the Control-Flow Graph (CFG). Finally, the results are passed to the alignment component. The objective of the parser components is to enable the other internal parts of the Intelligent Tutoring System to work independently from a specific programming language. The simplified illustration of the internal CFG-based program representation for the reference program is shown in Figure 2. Note that the (incorrect) student submission has the same structure, i.e., the same number of basic blocks, although a different content in the blocks.

Alignment

The alignment component takes the two Program objects and identifies the matching basic blocks. Therefore, it aligns the two programs with regard to their CFG representation. Moreover, it maps the existing variables for each function inside the programs. The results can later be used to detect (error) locations where the reference and submitted programs behave differently. Additionally, this information helps to attempt the repair/fix of the submitted program by reusing information from the reference program. Our current baseline implementation follows the approach by Clara, which attempts to match the two programs based on their control flow and their variables.

%

In our example, the structure is the same, so the mapping is straightforward. Internally, we keep a mapping for each function and its basic blocks:

\(main:\{1=1, 2=2, 3=3, 4=4, 5=5, 6=6, 7=7\}\)

To build the variable mapping, we use a Define-Use Analysis (DUA) (also see Hu et al.). The resulting variable matching is: \(main=\{i=i, sum=sum, j=j, n=N\}\)

Error Localizer and Interpreter

The error localizer component identifies locations that show erroneous behavior in the submitted program. This enables others components to formulate a repair/fix. The error localizer component has access to the interpreter component to execute test cases while observing the values of variables at specific locations. It can use the interpreter to detect semantic differences between the reference and submitted programs. For our example, we use a trace-based error localizer. It uses the interpreter to execute the inputs for both programs and compares the resulting execution traces.

For the input \(n=2\), our error localizer identifies a value mismatch at location 4.

It also detects which variable or expression holds the first observation of this mismatch: the loop condition in line 7 in the student's submission, j<=N.

Repair Engines

The repair component attempts to fix the submitted program. For example, it can use the mapping to the reference program (see step 2) and the identified error locations (see step 3) to generate so-called local repairs that modify single statements in the submitted program. Multiple local repairs can be combined to represent more complex changes.

The repair process results in a list of plausible repair candidates. It can also use the interpreter component to extract more information from the (correct) reference program.

For our example, we use an ILP-based repair implementation similar to Clara. It uses the reference implementation information to search for a minimal change to transform the student's program into the reference program. The local repair with the smallest repair cost is to change the condition location 6 from j <= N to j <= i.

Additionally, we already integrated other repair strategies like Refactory and particularly allow the use of multiple reference implementations to maximize the chances of structural matching between the student's submission and the reference solution.

Feedback Generators

With the collected information from previous components, the feedback component generates natural language explanations to guide students in correcting their mistakes without revealing the direct answer. This component incorporates a common front-end prompt interface with various LLM backends, allowing flexible switching between different LLMs and easy integration of new LLMs. Currently, it supports both commercial LLMs like GPT and Claude series, as well as open-source LLMs like LLaMA from Meta. We use GPT-3.5 as the default LLM backend to balance performance and cost.